In this blog we discuss Spark 1.6 core functionality and give a quick introduction to using Spark. We first demonstrate the basic functionality of RDDs. Later on we will demonstrate Spark SQL and DataFrame API functionality. We have tried to cover the basics of Spark 1.6 core functionality and programming contexts.

Introduction to Apache Spark

Spark is a powerful open source processing engine built around speed, ease of use, and sophisticated analytics. It is a cluster computing framework originally developed in the AMPLab at the University of California, Berkeley, and later donated to the Apache Software Foundation, where it remains today. It is a framework for performing general data analytics on a distributed computing cluster such as Hadoop. The main feature of Spark is its in-memory cluster computing, which increases the processing speed of an application compared with MapReduce. Spark can run on top of an existing Hadoop cluster and access the Hadoop data store (HDFS). It can also process structured data in Hive and streaming data from sources such as HDFS, Flume, Kafka and Twitter.

Features of Apache Spark

Some of the features that make Spark stand out in the Big Data world:

1. Speed

Spark can be up to 100x faster than Hadoop MapReduce for large-scale data processing by exploiting in-memory computing and other optimizations.

2. Ease of Use

Spark offers APIs in Scala, Java, Python and R, so developers can write and run applications in a programming language they already know, and its high-level operators make it easy to build parallel apps.

3. Combine SQL, Streaming & Complex Analytics

In addition to simple “map” and “reduce” operations, Spark supports SQL queries, streaming data, and complex analytics such as machine learning and graph algorithms out-of-the-box. Not only that, users can combine all these capabilities seamlessly in a single work-flow.

4. Advanced Analytics

Spark supports not only “map” and “reduce” but also SQL queries, streaming data, machine learning (ML) and graph algorithms.

5. A Unified Engine

Spark comes packaged with higher-level libraries, including support for SQL queries, streaming data, machine learning and graph processing.

6. Runs Everywhere

Spark runs on Hadoop, Mesos, standalone, or in the cloud, and it can access diverse data sources including HDFS, Cassandra, HBase and S3.

Spark Core

Spark Core is the basic functionality of Spark, including components for fault recovery, memory management, interacting with storage systems and more.

Initializing Spark

Before you can create a SparkContext, you first need to build a SparkConf object, which contains information about your application. The appName parameter is a name for your application to show on the cluster UI. master is a Spark, Mesos or YARN cluster URL, or a special “local” string to run in local mode.

import org.apache.spark.{SparkConf, SparkContext}

val conf = new SparkConf().setAppName("appName").setMaster("master")

// The examples below refer to this context as sc

val sc = new SparkContext(conf)

Create RDDs

There are two ways to create RDDs: the first is parallelizing an existing collection in your driver program, and the second is referencing a dataset in an external storage system.

Create RDDs using parallelize() method of SparkContext

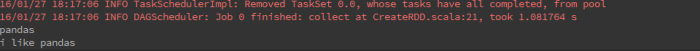

val lines = sc.parallelize(List("pandas", "i like pandas"))

lines.collect().foreach(println)

Create RDDs from external datasets using textFile() method of SparkContext

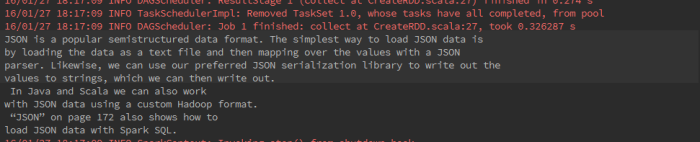

// Load our input data

val inputFile = sc.textFile("src/main/resources/test_file.txt")

// Split it up into words, then transform into pairs and count

val count = inputFile.flatMap(line => line.split(" ")).map(word => (word, 1)).reduceByKey(_ + _)

RDD Operations

Spark revolves around the concept of a resilient distributed dataset (RDD), which is a fault-tolerant collection of elements that can be operated on in parallel. RDDs support two types of operations: transformations, which create a new dataset from an existing one, and actions, which return a value to the driver program after running a computation on the dataset.
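As a minimal sketch of the difference between the two kinds of operations, the following standalone program builds an RDD, applies one transformation and then calls two actions. The object name RddOperationsSketch, the application name and the local[2] master are illustrative assumptions and are not part of the examples in this post.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical object name, used only for this illustration
object RddOperationsSketch {
  def run(): (Int, Long) = {
    // local[2] runs Spark in-process with two worker threads (assumed setup)
    val conf = new SparkConf().setAppName("rdd-operations-sketch").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      val numbers = sc.parallelize(List(1, 2, 3, 4, 5))
      // Transformation: returns a new RDD, no job is run yet
      val squares = numbers.map(n => n * n)
      // Actions: run the computation and return values to the driver
      val total = squares.reduce(_ + _)
      val howMany = squares.count()
      (total, howMany)
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit = {
    val (total, howMany) = run()
    println(s"sum of squares = $total, count = $howMany")
  }
}
```

Here map only describes the new dataset, while reduce and count are the calls that make Spark compute it.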


1. Transformations

Transformations are operations on RDDs that return a new RDD. Some common transformations supported by Spark are described below.

map()

Applies a function to each element in the RDD and returns an RDD of the results.

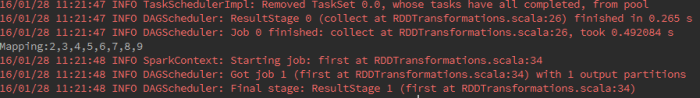

val input1 = sc.parallelize(List(1, 2, 3, 4, 5, 6, 7, 8, 9))

val result1 = input1.map(x => x + 1)

println("Mapping:" + result1.collect().mkString(","))

filter()

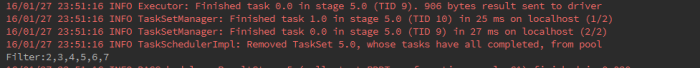

The filter() transformation returns an RDD consisting of only the elements that pass the condition passed to filter().

val filterInput = sc.parallelize(List(1, 2, 3, 4, 5, 6, 7))

val filterResult = filterInput.filter(x => x != 1)

println("Filter:" + filterResult.collect().mkString(","))

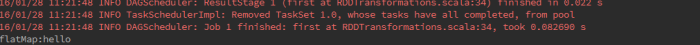

flatMap()

Similar to map, but each input item can be mapped to 0 or more output items (so the function should return a Seq rather than a single item).

val flatMapLines = sc.parallelize(List("hello world", "hi"))

val flatMapResult = flatMapLines.flatMap(line => line.split(" "))

println("flatMap:" + flatMapResult.first())

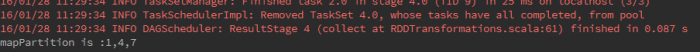
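To make the contrast with map concrete, here is a small sketch that applies both operations to the same input. The object name MapVsFlatMapSketch and the local[2] master are assumptions made for illustration only.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical object name, used only for this illustration
object MapVsFlatMapSketch {
  def run(): (List[Int], List[String]) = {
    val conf = new SparkConf().setAppName("map-vs-flatmap-sketch").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      val lines = sc.parallelize(List("hello world", "hi"))
      // map keeps one output per input: each line becomes one array of words
      val wordsPerLine = lines.map(line => line.split(" ")).map(_.length)
      // flatMap flattens the arrays: each word becomes its own element
      val words = lines.flatMap(line => line.split(" "))
      (wordsPerLine.collect().toList, words.collect().toList)
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit = {
    val (wordsPerLine, words) = run()
    println("words per line: " + wordsPerLine.mkString(","))
    println("all words: " + words.mkString(","))
  }
}
```

With map the result still has two elements, one per input line, whereas flatMap produces three elements, one per word.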

mapPartitions()

Similar to map, but runs separately on each partition (block) of the RDD.

// Three partitions; the function keeps the first element of each one

val inputData = sc.parallelize(1 to 9, 3)

val mapPartitionResult = inputData.mapPartitions(x => List(x.next).iterator)

println("mapPartition is :" + mapPartitionResult.collect().mkString(","))

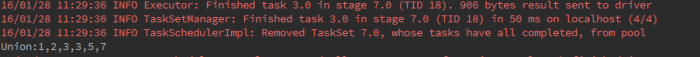
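Another way to see that the function receives a whole partition at a time is to aggregate inside it. The sketch below sums each partition separately. The object name MapPartitionsSketch and the local[2] master are assumptions for illustration.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical object name, used only for this illustration
object MapPartitionsSketch {
  def run(): List[Int] = {
    val conf = new SparkConf().setAppName("map-partitions-sketch").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      // Ask for three partitions explicitly
      val numbers = sc.parallelize(1 to 9, 3)
      // The function is called once per partition with an iterator over its elements
      val partitionSums = numbers.mapPartitions(iter => Iterator(iter.sum))
      partitionSums.collect().toList
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit = {
    println("sum per partition: " + run().mkString(","))
  }
}
```

With the range 1 to 9 split evenly into three partitions, this yields one sum per partition (6, 15 and 24) rather than one value per element.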

union()

Returns a new dataset that contains the union of the elements in the source dataset and the argument.

val inputRdd1 = sc.parallelize(List(1, 2, 3))

val inputRdd2 = sc.parallelize(List(3, 5, 7))

val resultInputUnion = inputRdd1.union(inputRdd2)

println("Union:" + resultInputUnion.collect().mkString(","))

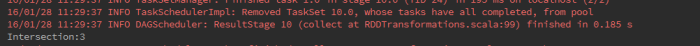

intersection()

Returns a new RDD that contains the intersection of elements in the source dataset and the argument.

val inputRdd1 = sc.parallelize(List(1, 2, 3))

val inputRdd2 = sc.parallelize(List(3, 5, 7))

val resultIntersection = inputRdd1.intersection(inputRdd2)

println("Intersection:" + resultIntersection.collect().mkString(","))

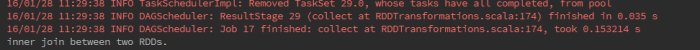

join()

When called on datasets of type (K, V) and (K, W), returns a dataset of (K, (V, W)) pairs with all pairs of elements for each key.

val inputTupple1 = sc.parallelize(List((1, 2), (3, 4), (4, 7)))

val inputTupple2 = sc.parallelize(List((5, 9)))

// The two RDDs share no keys here, so this inner join returns an empty result

val resultJoin = inputTupple1.join(inputTupple2)

println("inner join between two RDDs." + resultJoin.collect().mkString(","))
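For a join that actually produces pairs, the two datasets need keys in common. The following sketch uses made-up data in which only key 2 appears on both sides. The object name JoinSketch, the sample values and the local[2] master are assumptions for illustration.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical object name and sample data, used only for this illustration
object JoinSketch {
  def run(): List[(Int, (String, Int))] = {
    val conf = new SparkConf().setAppName("join-sketch").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      val left = sc.parallelize(List((1, "a"), (2, "b"), (2, "c")))
      val right = sc.parallelize(List((2, 10), (3, 20)))
      // Inner join: only keys present in both RDDs survive,
      // and every left value is paired with every right value for that key
      val joined = left.join(right)
      // Sort on the driver because the order of joined results is not guaranteed
      joined.collect().toList.sorted
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit = {
    println("join: " + run().mkString(","))
  }
}
```

Keys 1 and 3 are dropped because each occurs on one side only, while key 2 yields two pairs, one for each value on the left.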

2. Actions

Actions are operations that return a result to the driver program or write it to storage. Some common actions supported by Spark are described below.

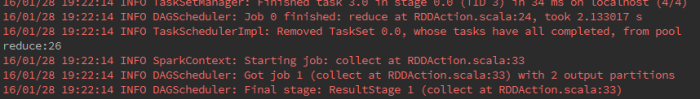

reduce()

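reduce(func) combines the elements of the RDD together in parallel. The function takes two arguments and returns one, and it should be commutative and associative so that it can be computed correctly in parallel.

val input = sc.parallelize(List(3, 2, 4, 6))

val inputs = sc.parallelize(List(2, 4, 2, 3))

val rUnion = input.union(inputs)

val resultReduce = rUnion.reduce((x, y) => x + y)

println("reduce:" + resultReduce + " ")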

collect()

collect() returns all elements of the RDD to the driver program as an array.

val inputElement = sc.parallelize(List(2, 3, 4, 4))

println("collect" + inputElement.collect().mkString(","))

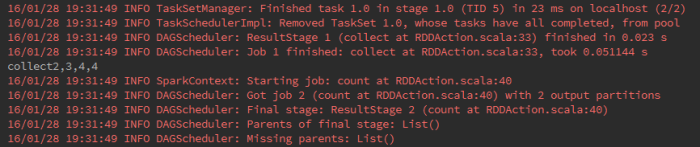

count()

count() returns the number of elements in the RDD.

val inputCount = sc.parallelize(List(2, 3, 4, 4))

println(" count:" + inputCount.count())

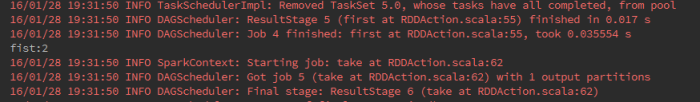

first()

Returns the first element of the dataset (similar to take(1)).

val inputFirst = sc.parallelize(List(2, 3, 4, 4))

println("first:" + inputFirst.first())

take()

take(num) returns the first num elements of the RDD.

val inputTake = sc.parallelize(List(2, 3, 4, 4))

println("take :" + inputTake.take(2).mkString(","))

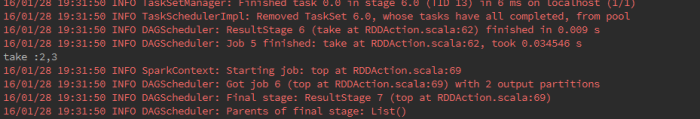

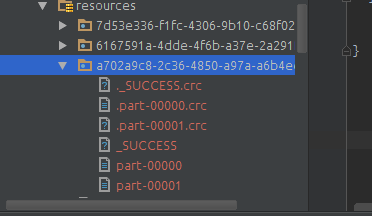

saveAsTextFile()

Writes the elements of the dataset as a text file (or set of text files) in a given directory in the local filesystem, HDFS or any other Hadoop-supported file system.

import java.util.UUID

// Load our input data.

val inputFile = sc.textFile("src/main/resources/test_file.txt")

// Split it up into words, then transform into pairs and count.

val count = inputFile.flatMap(line => line.split(" ")).map(word => (word, 1)).reduceByKey(_ + _)

// Save the word count back out to a text file, causing evaluation.

count.saveAsTextFile(s"src/main/resources/${UUID.randomUUID()}")

println("OK")

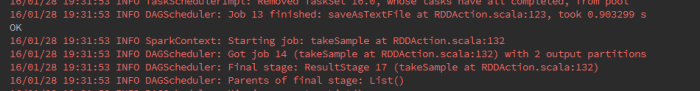

foreach()

Runs a function func on each element of the dataset.

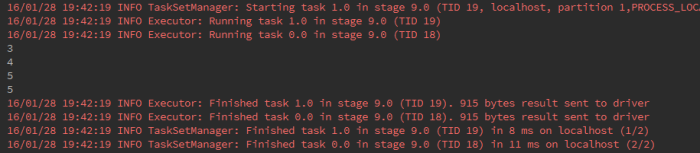

val inputForeach = sc.parallelize(List(2, 3, 4, 4))

inputForeach.foreach(x => println(x + 1))

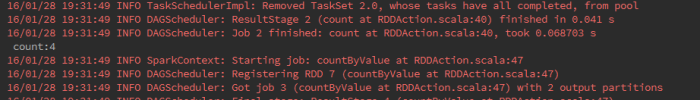

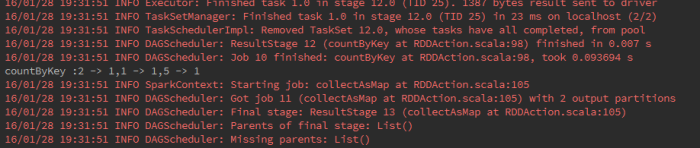

countByKey()

Count the number of elements for each key.

val inputAction = sc.parallelize(List((1, 2), (2, 3), (5, 4)))

println("countByKey :" + inputAction.countByKey().mkString(","))
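In the example above every key occurs exactly once, so each count is 1. As a small sketch with repeated keys, the program below counts made-up string keys. The object name CountByKeySketch, the sample data and the local[2] master are assumptions for illustration.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical object name and sample data, used only for this illustration
object CountByKeySketch {
  def run(): Map[String, Long] = {
    val conf = new SparkConf().setAppName("count-by-key-sketch").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      val pairs = sc.parallelize(List(("a", 1), ("b", 2), ("a", 3)))
      // countByKey is an action: it returns a map of key -> number of elements
      // to the driver, ignoring the values themselves
      pairs.countByKey().toMap
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit = {
    println("countByKey: " + run().mkString(","))
  }
}
```

Because the whole result map is returned to the driver, countByKey is best suited to datasets with a modest number of distinct keys.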

This is the start of our Spark tutorial. From next week onwards we will keep working on this topic to make it grow. We will look at how to make the tutorial more useful and keep adding more content to it. If you have any suggestions, feel free to share them with us 🙂 Stay tuned.
